By Robert Walker, CEO & Founder, Surveys & Forecasts, LLC, Norwalk, Connecticut, rww@safllc.com

If people suspect bias in a research study, they lose confidence in the results. Unfortunately, research bias exists in many forms and in more ways than we would like to admit. In research, bias refers to any factor that systematically distorts data, results, or conclusions. As researchers, our job is not only to understand bias but also to find ways to either control or offset it. After all, it is the negative impact of bias that we most want to avoid, such as inaccurate conclusions or misleading insights.

Additionally, bias is not limited to any one specific research approach. Sources of bias exist in quantitative and qualitative research and can occur at any stage of the research process, from initial problem definition to making client recommendations. Each of us brings our unique perspective based on personal experiences and what we believe to be true or not. The insidious thing about bias is that we won’t always anticipate it, nor will we recognize it even as it is happening!

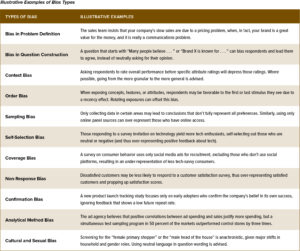

This article explores some of the more well-known types of bias that commonly arise in marketing research studies and offers suggestions on how you can avoid these errors. We also provide a reference table that lists these biases, summarizes common errors, and provides ways to avoid the negative impact of bias in your research practice. Let’s take a look at some of the more common forms of bias, from initial research design to analysis and interpretation.

Bias in Problem Definition

The foundation of any research project starts with the problem definition and the approach you will take to address it. This is the first point at which bias can creep in. A research problem framed too narrowly or based on preconceived assumptions can lead to a study that simply validates preexisting (and potentially wrong) beliefs rather than uncovering new insights.

Incorrect assumptions about the source of a marketing problem are one form of bias. For example, a company’s sales team believed that weak sales of a flagship brand were due to a high price point. This belief led to promotional campaign testing instead of a more broadly defined brand image study. Had the appropriate research been conducted, management would have learned that the brand actually represented a great value, but that consumers knew little about the value story. The company spent time and money solving the wrong problem based on an incorrect assumption.

An over-reliance on industry trends can also skew the problem definition. For example, a company’s management team believed that future marketing trends favored more spending with social media influencers, so the company spent heavily with several of them. A post-campaign analysis showed that the extra spending never reached the company’s more broadly defined audience and did not reflect the diversity of its market.

To mitigate this, it is crucial to:

- Engage diverse stakeholders who can challenge our own assumptions.

- Conduct exploratory research to broaden understanding and build hypotheses.

- Frame your problem definition around the consumer’s mindset, not preconceived beliefs.

Bias in Question Construction

Once the problem is defined, another potential source of bias is in question construction. Subtle changes in phrasing can lead to drastically different responses, and leading questions can direct respondents toward a particular answer. Asking, “Wouldn’t you agree that our customer service is exceptional?” encourages a positive and biased response and downplays negative feedback. This is often seen in satisfaction surveys that impact employee compensation, where the recipient of the feedback coaches the respondent to provide good ratings (e.g., “Please rate us highly!”).

Loaded questions, which embed assumptions, are similarly problematic. For example, asking, “How much do you spend on luxury skincare products each month?” presumes that the respondent purchases products in the luxury skincare category, which can alienate respondents or inflate reported spending.

Double-barreled questions (i.e., two questions in one) are also a common error. For example, “How satisfied are you with our product quality and customer service?” Mixing two separate aspects in one question can confuse respondents and lead to unclear data, as they may have different opinions of the two aspects.

To avoid these errors:

- Use neutral language in all questions.

- Pilot test surveys to identify unintended biases.

- Ensure each question focuses on a single issue, avoiding double-barreled phrasing.

Sample Selection and Coverage Bias

Bias in sample selection or coverage areas can severely distort the findings of marketing research. Sampling bias occurs when certain groups are over- or underrepresented in screening, sample definition, or geographic area. For instance, focusing a survey solely on urban customers may overlook rural or suburban buyers, leading to unrepresentative results for a national campaign.

Similarly, self-selection bias can occur when individuals who choose to participate are meaningfully different from those who do not. Customers with strong positive or negative opinions may be more likely to respond, while those with neutral opinions might remain silent, skewing the results toward the extremes. Incentives may increase participation but also have the potential to attract only those who are motivated by financial rewards.

One approach to overcoming coverage and sampling bias is to use quotas for specific subgroups and then weight the subgroups into their proper proportions. However, there is also a risk of over- or under-weighting some groups at the expense of others.
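The weighting step described above can be sketched in a few lines of Python. This is a minimal illustration, not a production weighting routine: the sample counts, the population shares, and the function names `poststratification_weights` and `effective_sample_size` are all hypothetical and chosen only for this example. Each group's weight is its population share divided by its sample share, and the Kish effective sample size is one common way to see how much precision is lost when some groups are weighted heavily.

```python
from collections import Counter


def poststratification_weights(sample_groups, population_shares):
    """Return one weight per group: population share / sample share."""
    counts = Counter(sample_groups)
    n = len(sample_groups)
    weights = {}
    for group, target_share in population_shares.items():
        sample_share = counts[group] / n
        if sample_share == 0:
            # A group with no respondents cannot be weighted up.
            raise ValueError(f"no respondents in group {group!r}")
        weights[group] = target_share / sample_share
    return weights


def effective_sample_size(respondent_weights):
    """Kish approximation: (sum of weights)^2 / (sum of squared weights)."""
    total = sum(respondent_weights)
    return total ** 2 / sum(w * w for w in respondent_weights)


# Hypothetical completed sample: urban respondents are overrepresented.
sample = ["urban"] * 70 + ["suburban"] * 20 + ["rural"] * 10
# Hypothetical population shares (assumed for illustration only).
targets = {"urban": 0.5, "suburban": 0.3, "rural": 0.2}

weights = poststratification_weights(sample, targets)
respondent_weights = [weights[group] for group in sample]
n_eff = effective_sample_size(respondent_weights)

for group, weight in weights.items():
    print(f"{group}: weight {weight:.2f}")
print(f"effective sample size: {n_eff:.1f} of {len(sample)} completes")
```

In this made-up case, each rural respondent counts twice while each urban respondent counts for less than one, and the effective sample size falls below the 100 completes actually collected. That gap is one way to quantify the over- and under-weighting risk noted above.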

Addressing these issues requires:

- Random sampling so that every member of the target population has a known chance of being selected.

- Alternatively, stratified sampling to ensure a proportional representation of key subgroups.

- Considering demographic and geographic coverage to prevent skewed results.
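As a rough sketch of the stratified approach listed above, the Python example below draws a proportional sample from each subgroup of a sampling frame. The frame, the `area` field, and the function name `stratified_sample` are hypothetical and exist only for this illustration; a real study would define strata from its own screening criteria.

```python
import random
from collections import Counter


def stratified_sample(frame, strata_key, total_n, seed=0):
    """Draw a simple random sample within each stratum, sized in
    proportion to that stratum's share of the frame."""
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    strata = {}
    for record in frame:
        strata.setdefault(record[strata_key], []).append(record)

    picked = []
    for members in strata.values():
        # Rounding can make the total differ slightly from total_n
        # when the shares do not divide evenly.
        k = round(total_n * len(members) / len(frame))
        picked.extend(rng.sample(members, min(k, len(members))))
    return picked


# Hypothetical frame of 1,000 customers (assumed for illustration only).
areas = ["urban"] * 500 + ["suburban"] * 300 + ["rural"] * 200
frame = [{"id": i, "area": area} for i, area in enumerate(areas)]

picked = stratified_sample(frame, "area", total_n=100)
print(Counter(record["area"] for record in picked))
```

Because the allocation mirrors the frame, no weighting is needed afterward in this simple case. If a small subgroup must be oversampled for analysis, the allocation would no longer be proportional and weights would be needed to restore the correct proportions.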

Avoiding Cultural and Sexual Bias

Cultural and sexual bias is an often-overlooked issue in survey design. Surveys that fail to acknowledge cultural differences or that make assumptions about gender and sexuality can alienate respondents and result in inaccurate or incomplete data. For example, questions that assume traditional family structures or fail to recognize nonbinary gender identities can alienate a significant portion of respondents and introduce bias into the research.

Common errors in this area include using language that assumes heteronormative relationships or ignores cultural differences in lifestyle, values, or product use. A question about alcohol consumption, for example, may not be relevant in cultures where alcohol is forbidden or stigmatized. Questions about childcare or child-rearing should not automatically be asked of a female in the household, in the same way that questions about home maintenance ought not to be asked only of a male in the household.

Over-generalizing cultural experiences or stereotyping is another area to be aware of and perhaps requires us to be the most self-aware in terms of how we think and phrase questions. For instance, assuming that all respondents from a particular ethnic background share the same cultural preferences, buying behaviors, or brand choices is a bias that can distort our research.

To avoid cultural and sexual bias, researchers should:

- Use inclusive, neutral language that does not assume a specific gender or sexual orientation.

- Be sensitive to cultural differences, avoiding questions that may be irrelevant or offensive to certain groups.

- Ensure that surveys are tested on diverse groups before widespread deployment to identify potential biases in question phrasing.

Context and Order Bias

Context bias occurs when responses to a question are influenced by earlier questions or stimuli during the survey. If a respondent is first asked how satisfied they are with customer service, their response to a later question about overall brand satisfaction may be disproportionately influenced by that prior focus on customer service. Similarly, asking about overall satisfaction before asking about attributes or other detailed items can create a biasing effect. Hence, proper question sequencing can avoid these traps.

Order bias is a specific form of context bias in which the order of questions or response options affects how respondents answer. This can often be mitigated by rotating question blocks or specific attributes within a ratings section. A primacy effect occurs when respondents favor the first option presented; a recency effect occurs when respondents favor the last option shown. For example, respondents who are shown too many options may disproportionately choose the first or last one, depending on how the items are presented. In the same way, concepts that are shown first are typically rated higher than those shown in other positions.

Attribute salience bias occurs when certain attributes are made more prominent, causing respondents to prioritize that feature in their decision-making. We should strive to assess all attributes equally, also known as “balanced framing.” For example, price is a very powerful factor in all buying decisions. If we single out price as a separate question, we run the risk of making the respondent very price-conscious, such as with the question “How important is price in your buying decisions?” versus asking about price in a ratings grid along with many other attributes.

To minimize the impact of context and order bias:

- Randomize the order of questions and response options within a ratings section, unless the options are intended to be chronological.

- Group related questions to limit the influence of unrelated preceding questions.

- Use balanced framing to avoid highlighting specific attributes over others.
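Most survey platforms offer randomization as a built-in setting, but the logic is simple enough to sketch in Python. The example below is an illustration under stated assumptions: the function name `randomized_options`, the attribute list, and the choice to pin "None of the above" to the end are hypothetical, and ordered lists (such as chronological options or rating scales) are deliberately left untouched.

```python
import random


def randomized_options(options, respondent_id, keep_last=(), ordered=False):
    """Return the options in a per-respondent random order.

    ordered=True leaves the list as-is (chronological lists, scales).
    Items named in keep_last stay at the end in their original order.
    """
    if ordered:
        return list(options)
    # Seeding with the respondent id makes each respondent's order
    # reproducible, so it can be logged and checked during analysis.
    rng = random.Random(respondent_id)
    movable = [o for o in options if o not in keep_last]
    anchored = [o for o in options if o in keep_last]
    rng.shuffle(movable)
    return movable + anchored


# Hypothetical attribute list (assumed for illustration only).
attributes = ["Price", "Quality", "Service", "Selection", "None of the above"]

for respondent_id in (101, 102, 103):
    shown = randomized_options(
        attributes, respondent_id, keep_last=("None of the above",)
    )
    print(respondent_id, shown)

# A chronological list is passed through unchanged.
periods = ["Past week", "Past month", "Past year"]
print(randomized_options(periods, 101, ordered=True))
```

Across many respondents, each attribute appears in each position about equally often, so any primacy or recency effect is spread across the items rather than concentrated on one.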

Summary

Bias is everywhere and cannot be fully eliminated. But when we are aware of the forms it can take, we can find ways to mitigate its potential negative impact. The most profound negative impact of bias is that we simply get it wrong: we either come to an incorrect conclusion or, worse, one that is damaging to our client’s business.

As researchers, it is our responsibility to identify, and be on the lookout for, the negative effects of bias. Don’t be afraid to challenge preexisting approaches or entrenched ways of thinking. From ensuring good research design and representative sample selection to constructing neutral, balanced questions, researchers have many tools to reduce bias at every stage of the research process!